Darshan Prabhu from the Content Analysis - Audio team summarises the research paper ‘Efficient infusion of self-supervised representations in Automatic Speech Recognition’, which he co-authored with Sai Ganesh Mirishkar and Pankaj Wasnik of Sony Research India.

The team will present this paper at the poster session of the 3rd Workshop on Efficient Natural Language and Speech Processing at the Neural Information Processing Systems (NeurIPS) conference, held in New Orleans from 10 to 16 December 2023.

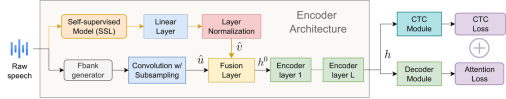

Self-supervised learning (SSL) models such as Wav2vec and HuBERT have achieved state-of-the-art results on speech-related tasks. Given their effectiveness, it is advantageous to use them in conventional Automatic Speech Recognition (ASR) systems. Some approaches incorporate these models directly as an encoder or a frontend, but training such systems is extremely slow and computationally expensive, because the large SSL model has to be run at every training step.

If you have a limited training budget, an alternative is to use only the representations produced by these SSL models rather than making the models part of your neural network. Because only the representations are needed, they can be extracted beforehand as a pre-processing step, which is easy to parallelise across jobs. This one-time step removes the SSL model from training altogether, significantly reducing training time while sacrificing very little performance. The idea is not new: it has already been explored in NLP, where representations from models like BERT are used for neural machine translation (“Incorporating BERT into Neural Machine Translation”, arXiv:2002.06823).
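The one-time pre-processing step can be sketched as follows. This is a minimal illustration, not the authors' pipeline: `fake_ssl_encoder` is a hypothetical stand-in for a frozen SSL model such as Wav2vec or HuBERT, the cache layout (one JSON file per utterance) is an assumption chosen to keep the example dependency-free, and a thread pool stands in for the parallel jobs mentioned above. In practice you would store arrays in a binary format and shard the work across separate processes or cluster jobs.

```python
import json
import tempfile
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path
from typing import Callable, Iterable, List

Features = List[List[float]]  # frames x feature dimensions


def fake_ssl_encoder(utt_id: str) -> Features:
    """Hypothetical stand-in for a frozen SSL model (e.g. Wav2vec or HuBERT)."""
    num_frames = 3 + len(utt_id) % 4
    return [[float(i)] * 2 for i in range(num_frames)]


def precompute(utt_ids: Iterable[str], encode: Callable[[str], Features],
               cache_dir: Path, workers: int = 4) -> None:
    """One-time pre-processing: run the SSL encoder once per utterance and cache it."""
    cache_dir.mkdir(parents=True, exist_ok=True)

    def job(utt_id: str) -> None:
        out = cache_dir / f"{utt_id}.json"
        if not out.exists():  # skip finished utterances so an interrupted run can resume
            out.write_text(json.dumps(encode(utt_id)))

    with ThreadPoolExecutor(max_workers=workers) as pool:
        list(pool.map(job, utt_ids))  # list() re-raises any exception from a worker


def load_cached(utt_id: str, cache_dir: Path) -> Features:
    """During training, read the stored representations; no SSL model is loaded."""
    return json.loads((cache_dir / f"{utt_id}.json").read_text())


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        cache = Path(tmp)
        precompute(["utt1", "utt2"], fake_ssl_encoder, cache)
        print(len(load_cached("utt1", cache)))  # 3
```

Because the existence check makes the step idempotent, it can safely be rerun after a failure; the training data loader then only ever calls `load_cached`.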

Figure 1

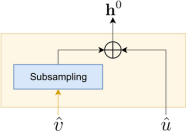

Figure 2

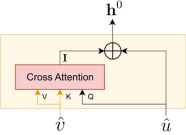

Figure 3

For the fusion layer, which merges the pre-extracted SSL representations into the ASR model, we explored two straightforward approaches, shown in Figures 2 and 3. Figure 2 gives an overview of framewise addition-based fusion, which exploits the linear relationship between the lengths of the two representations (for example, a sequence extracted with a 20 ms frame shift has half as many frames as one extracted with a 10 ms shift). It uses subsampling to bring both representations to the same length and then adds them frame by frame. Figure 3, on the other hand, uses cross-attention to merge the representations. This approach does not depend on the lengths of the representations and can accommodate sequences of any length. Further details on both approaches can be found in our paper.
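Both fusion strategies can be sketched in plain Python as below. This is a minimal illustration under stated assumptions, not the authors' implementation: we assume the ASR features and the SSL representations have already been projected to a common dimension, use strided frame selection as a stand-in for the subsampling step, and use single-head attention without learned projections, with ASR frames as queries and SSL frames as keys and values. All function names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]  # rows are frames, columns are feature dimensions


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def framewise_addition(asr_feats: Matrix, ssl_feats: Matrix) -> Matrix:
    """Subsample the longer sequence by the (rounded) length ratio, then add frame by frame.

    Assumes both inputs already share one feature dimension. Strided frame selection
    stands in for whatever subsampling the paper actually uses.
    """
    if len(asr_feats) >= len(ssl_feats):
        longer, shorter = asr_feats, ssl_feats
    else:
        longer, shorter = ssl_feats, asr_feats
    ratio = max(1, round(len(longer) / len(shorter)))  # e.g. 10 ms vs 20 ms frames -> 2
    subsampled = longer[::ratio]
    # zip stops at the shorter sequence, trimming any leftover frames
    return [[x + y for x, y in zip(f1, f2)] for f1, f2 in zip(subsampled, shorter)]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: Matrix, keys_values: Matrix) -> Matrix:
    """Single-head scaled dot-product attention from ASR frames to SSL frames.

    Every ASR frame (query) attends to every SSL frame (key/value), so the two
    sequence lengths need not be related. Learned Q/K/V projections, multiple
    heads and residual connections are omitted for brevity.
    """
    d = len(queries[0])
    keys_t = [list(col) for col in zip(*keys_values)]  # (d x T_ssl)
    scores = matmul(queries, keys_t)  # (T_asr x T_ssl)
    weights = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    return matmul(weights, keys_values)  # (T_asr x d): one context vector per ASR frame


if __name__ == "__main__":
    fbank = [[float(t)] * 4 for t in range(8)]  # 8 frames, e.g. a 10 ms frame shift
    ssl = [[1.0] * 4 for _ in range(4)]  # 4 frames, e.g. a 20 ms frame shift
    print(len(framewise_addition(fbank, ssl)))  # 4
    print(len(cross_attention(fbank, ssl)))  # 8
```

Note the difference in output length: framewise addition yields the shorter sequence's length, whereas cross-attention keeps one output per query frame. In a real model the attention output would typically be combined with the ASR stream (for instance through a residual addition) and computed as batched tensor operations, e.g. with PyTorch's `nn.MultiheadAttention`.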

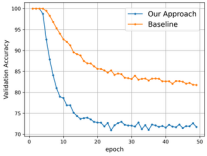

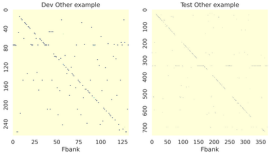

Figure 4

Figure 5

To learn more about Sony Research India’s research publications, visit the ‘Publications’ section of our ‘Open Innovation’ page: