Brijraj Singh and Sonal Dabral summarize their paper, recently accepted at IJCNN (the International Joint Conference on Neural Networks) in Australia, a premier conference on neural network theory, analysis, and applications.

Our research introduces a versatile multi-modal, multi-task framework that integrates several modalities, including audio, video, text, and subtitles from movie trailers. These modalities are fused with the CentralNet methodology into a single consolidated vector, the item representation. The item representation, together with a user representation, is then fed into a multi-task architecture. The primary task is rating prediction; the auxiliary tasks are genre prediction, user age-group prediction, and user gender identification. We employed two variants of the multi-task architecture: fully shared and shared-private.

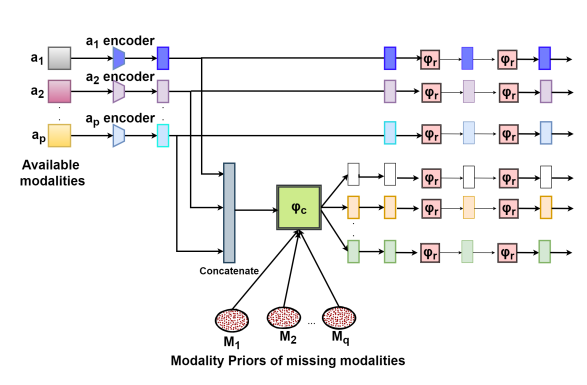
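To make the fusion and multi-task steps more concrete, here is a minimal NumPy sketch of a forward pass. It assumes a CentralNet-style fusion in which, at each depth, the central stream combines its previous state with every modality's hidden state through scalar weights before applying its own layer. All feature sizes, layer widths, task output sizes, and names (`CentralNetFusion`, `MultiTaskHeads`, `TASKS`) are hypothetical, training is omitted, and this is not the paper's implementation.

```python
import numpy as np

# Hypothetical sketch of CentralNet-style fusion + multi-task heads (forward pass only).
rng = np.random.default_rng(0)

# Illustrative output sizes per task (assumptions, not taken from the paper).
TASKS = {"rating": 1, "genre": 18, "age_group": 7, "gender": 1}


def init_layer(d_in, d_out):
    """Random weights and zero bias for a dense layer."""
    return rng.normal(0.0, 0.1, size=(d_in, d_out)), np.zeros(d_out)


def dense(x, layer):
    """Dense layer followed by a ReLU."""
    w, b = layer
    return np.maximum(0.0, x @ w + b)


class CentralNetFusion:
    """At every depth i the central stream mixes its previous state with each
    modality's hidden state via scalar weights (learned in practice, fixed
    to 1 here) and then applies its own dense layer."""

    def __init__(self, input_dims, hidden, depth):
        self.hidden = hidden
        self.modal = [[init_layer(d if i == 0 else hidden, hidden) for i in range(depth)]
                      for d in input_dims]
        self.central = [init_layer(hidden, hidden) for _ in range(depth)]
        self.alpha_c = np.ones(depth)
        self.alpha_m = np.ones((depth, len(input_dims)))

    def __call__(self, inputs):
        h_m = list(inputs)
        h_c = np.zeros((inputs[0].shape[0], self.hidden))  # no central state before depth 0
        for i, central_layer in enumerate(self.central):
            h_m = [dense(h, stack[i]) for h, stack in zip(h_m, self.modal)]
            mix = self.alpha_c[i] * h_c + sum(a * h for a, h in zip(self.alpha_m[i], h_m))
            h_c = dense(mix, central_layer)
        return h_c  # fused item representation


class MultiTaskHeads:
    """'fully_shared': every task head reads one shared trunk.
    'shared_private': each head reads [shared trunk, task-private trunk]."""

    def __init__(self, d_in, hidden, mode):
        self.mode = mode
        self.shared = init_layer(d_in, hidden)
        self.private = ({t: init_layer(d_in, hidden) for t in TASKS}
                        if mode == "shared_private" else {})
        head_in = 2 * hidden if mode == "shared_private" else hidden
        self.heads = {t: (rng.normal(0.0, 0.1, size=(head_in, k)), np.zeros(k))
                      for t, k in TASKS.items()}

    def __call__(self, x):
        shared = dense(x, self.shared)
        out = {}
        for task, (w, b) in self.heads.items():
            feats = shared
            if self.mode == "shared_private":
                feats = np.concatenate([shared, dense(x, self.private[task])], axis=1)
            out[task] = feats @ w + b  # rating score or unnormalized logits
        return out


# Usage with random stand-ins for audio, video, text, and subtitle features.
batch, dims = 4, [128, 256, 300, 300]  # hypothetical feature sizes
features = [rng.normal(size=(batch, d)) for d in dims]
item = CentralNetFusion(dims, hidden=64, depth=2)(features)
user = rng.normal(size=(batch, 32))  # stand-in for a learned user embedding
x = np.concatenate([item, user], axis=1)
outputs = MultiTaskHeads(x.shape[1], hidden=64, mode="shared_private")(x)
```

In the shared-private variant each task head sees the shared features concatenated with its own private features, whereas in the fully shared variant all heads read the same trunk. During training, a common choice (assumed here, not taken from the paper) is to minimize the rating loss plus down-weighted auxiliary losses for genre, age group, and gender.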

The study also addresses real-world scenarios in which some modalities may be unavailable due to privacy considerations. To handle this, we implemented a Bayesian meta-learning framework that reconstructs missing modalities from the available ones. The proposed model outperformed existing state-of-the-art multimodal recommendation models on two widely used recommendation datasets, MovieLens-100K and MMTF-14K.
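The sketch below illustrates only the basic idea of reconstructing a missing modality from an available one with quantified uncertainty. It deliberately swaps in plain Bayesian linear regression, not the Bayesian meta-learning framework used in the paper: embeddings from items where both modalities are present fit a Gaussian posterior over a linear map, which then predicts the missing embedding (mean and variance) for the remaining items. The synthetic data, the linear relation, the function names, and the hyperparameters `alpha` and `beta` are all assumptions.

```python
import numpy as np

# Stand-in: Bayesian linear regression, NOT the paper's Bayesian meta-learning method.


def fit_bayes_linear(X, Y, alpha=1.0, beta=25.0):
    """Gaussian posterior over W in Y = X @ W + noise, with prior
    W ~ N(0, alpha^-1 I) and noise precision beta (assumed hyperparameters)."""
    d = X.shape[1]
    S = np.linalg.inv(alpha * np.eye(d) + beta * X.T @ X)  # posterior covariance (shared by all output columns)
    M = beta * S @ X.T @ Y  # posterior mean of W
    return M, S


def reconstruct(x_avail, M, S, beta=25.0):
    """Predictive mean of the missing embedding and its per-item predictive variance."""
    mean = x_avail @ M
    var = 1.0 / beta + np.einsum("nd,de,ne->n", x_avail, S, x_avail)
    return mean, var


# Synthetic example: "audio" embeddings are always present; "video" is missing for some items.
rng = np.random.default_rng(0)
true_map = rng.normal(size=(16, 8))  # hidden linear relation (synthetic)
audio = rng.normal(size=(200, 16))
video = audio @ true_map + 0.2 * rng.normal(size=(200, 8))  # noise std 0.2 -> precision 25

M, S = fit_bayes_linear(audio[:150], video[:150])  # items with both modalities
video_hat, var = reconstruct(audio[150:], M, S)  # treat video as missing for the last 50
rmse = np.sqrt(np.mean((video_hat - video[150:]) ** 2))
print(f"reconstruction RMSE: {rmse:.3f}")
```

The predictive variance grows for items whose available embedding lies far from the training data, which is one way a Bayesian treatment signals how much to trust a reconstructed modality.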

Overall, our framework not only integrates diverse modalities effectively but also remains robust when some modalities are missing, advancing the field of multimodal recommendation systems. Our results underscore the effectiveness of the proposed approach and set a new benchmark for future research in this domain.